Moving to the Cloud & Company Transformation

From the Google Cloud Next ’19 Webinar, Presented by

Thomas Martin, Founder, NephōSec | Brian Johnson, Founder, DivvyCloud

In 2019, Thomas Martin, Founder and President of NephōSec, and Brian Johnson, Founder of DivvyCloud, discussed their experiences migrating traditional IT assets to the cloud at Google Cloud Next ’19. The video and slides of the presentation that follow demonstrate the paradigm shift an organization needs in how it views migration to the cloud, and the transformative effect that migration can have on the organization itself.

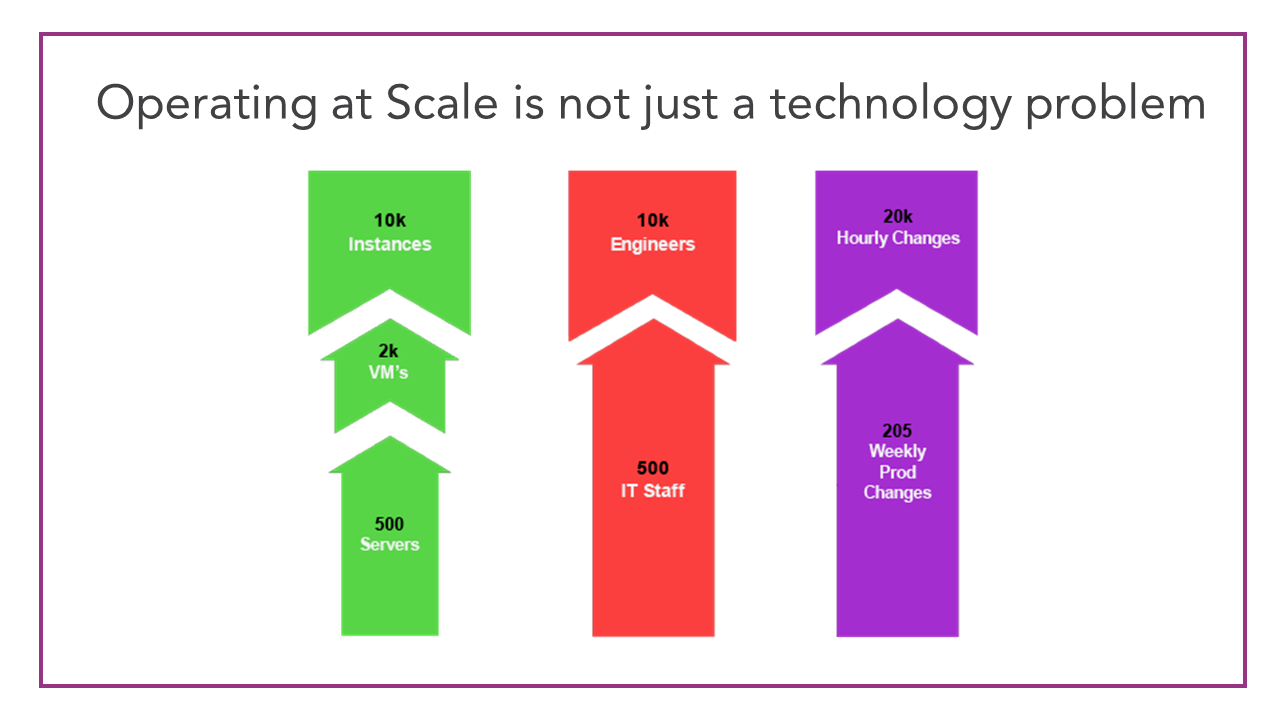

As we adopted cloud technology, we started out treating it like a technology problem, but that actually wasn’t the case. It was a culture problem. What we realized was that the shift to self-service was incredibly important for our ability to compete. All of our competitors were out there building products, but doing it much faster and getting those products to market. How could we keep up with that? How could we continue to innovate? We couldn’t do that if IT continued to get in the way. So we needed to find a way to allow our engineering organizations to access the cloud, deploy applications, and innovate through cloud infrastructure without IT and security getting in the way of that. Through that process, we discovered it wasn’t just a technology problem. It was three things that came together to create an issue for security:

- The number of resources managed

- The number of people touching the infrastructure

- How often those resources are changing

These three things combined led to an incredibly difficult problem. How do we deal with this at scale? How could we possibly, as an organization, understand everything that was changing and react to it in a reasonable amount of time when the traditional security and IT processes we had in place were super slow? That is the problem we see out there. This is not a technology issue; it’s a “company transformation” issue. How do you get ahead of this, and how do you deal with scale?

So what does this mean from a practical aspect?

This is a simple 3-tier architecture. The opportunities for misconfiguration seem quite small. You’ve got a load balancer, a couple of compute instances spanning a couple of availability zones, cloud storage, and Cloud SQL. If you are managing this at a small scale with a single team, it’s not all that hard. However, when you start looking at it, there are more than 20 opportunities for misconfiguration just in this simple 3-tier architecture. Think about that when you begin to migrate 5, 7, or 10 thousand applications across an enterprise. Trying to manage this at any kind of scale becomes unwieldy.

What I have found in my past experience is that somewhere between 100 and 200 applications is when the whole structure starts to fall apart. You have to begin thinking not only about the CI/CD process but about all the configurations, both in real time upon deployment and going forward.

So what does this lead to?

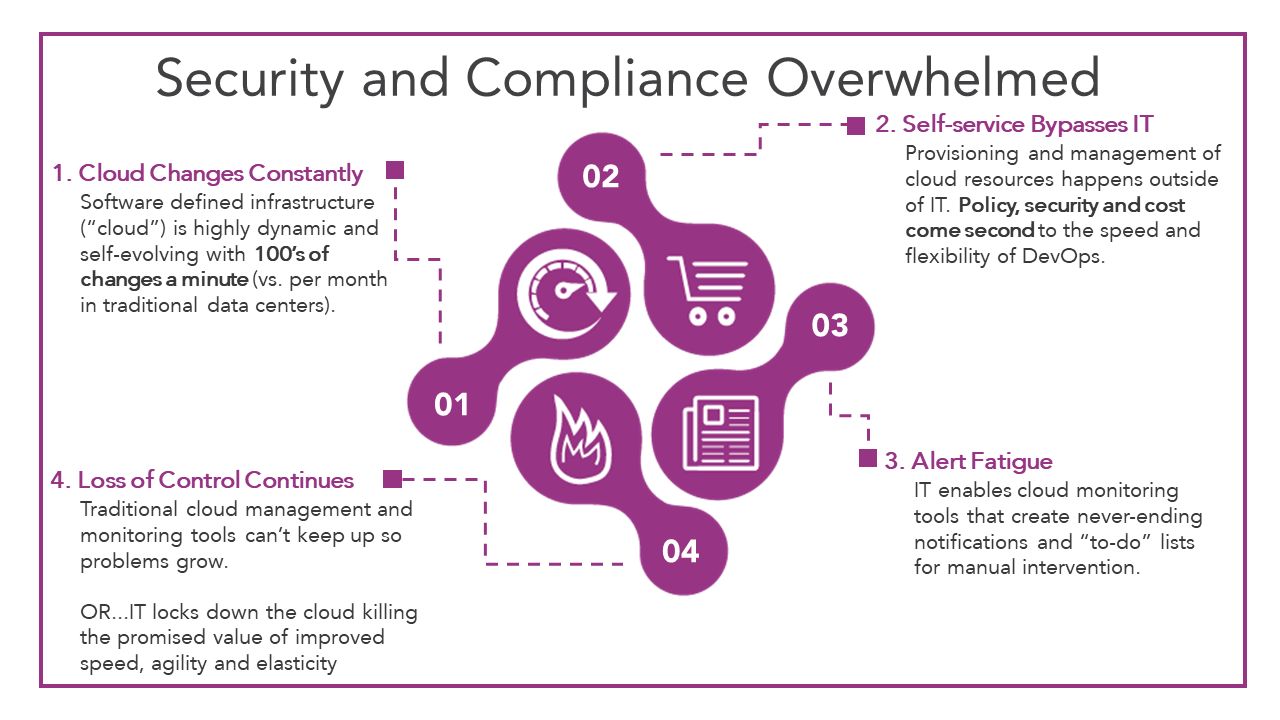

In our case, it led to a couple of consequences. First, it led to the loss of control. We are letting engineers deploy. That’s a great thing. You want that innovation. You need that innovation. To survive, the company has to find a way to compete through innovation. It’s important, but you lose control. It used to be that any change made in the infrastructure went through us first, so we would know about a problem. We would see a mistake and be able to stop it. That is not necessarily the case anymore. All things considered, this happened quickly. You went from having an IT organization with processes in place to catch these issues through its controls and gateways, to engineers doing all sorts of things all over the place. The problem is that nobody sat the engineering organization down and explained to them the 20 years of security issues that we hit. It’s not like IT learned that stuff the easy way. We got compromised, we had problems, and we had issues, but we learned and built processes. Unfortunately, those processes slowed us down.

Secondly, when we started to move toward a more cloud-native approach, we thought we would just do alerting. We’d get alerts every time there was something we needed to pay attention to, but that quickly got out of control. It just became “Whack-A-Mole”. There was no way to keep up with it, and it caused alert fatigue. We were getting Slack messages or emails and not knowing which ones to pay attention to or where the important areas were. In reality, of the 20,000 changes an hour that you are dealing with, there’s going to be one message or email that might be important, and you might have a hard time identifying which one you need to pay attention to.

Noise + Signal

This is really a signal and noise problem. How do you reduce all of the noise with everything going on, so you can focus on the signal? The answer is to leverage automation. There is just no way that we can rely on traditional IT processes: using a runbook, correcting problems by hand, or contacting the person to talk to them about making the change. By the time that has occurred, the application has been torn down and redeployed three times. So you need to get rid of the noise by leveraging automation, so that your IT staff, your security staff, your SecOps, and your CloudOps can focus on the 10% that they need to be dealing with on a more manual and active basis.

Traditional IT and Security Processes Are Ineffective

The traditional IT perimeter and processes that we have always used and relied upon are ineffective. You just can’t handle those kinds of changes at scale. They are still important. I’m not minimizing the importance of perimeter control. But how do we filter out the noise? For those of you who work for large enterprise firms, think about the IT procurement process. In their heads, the development teams have probably already added an extra 60 to 90 days to the schedule. By the time things get through procurement, the backlog of servers making it to the data center, and the time it takes to rack a server, install the operating system, and get it networked, we are looking at somewhere between 90 and 120 days. I’ve seen it take up to 180 days to get procured servers into the data center. With processes like that, by the time you stand something up to detect and resolve a problem, the resource may already have been built and gone. You have to be able to move more quickly.

When you are going through this process, working with the engineers and talking about the problems that they are going to face when they start to adopt the cloud will help your team get on the same page. Sometimes people don’t understand the scale of the attack surface or the offensive nature of what is going on out there. We used to have an exercise we would do with our engineers when they came on board. We’d have them deploy a server into a secured environment where Port 22 is open to the world and completely publicly accessible, then set up a root account with password login. We just have them time it. How long before that box gets popped? Going through that exercise opens your eyes to the number of things that are out there scanning and looking, trying to find ways in. Ten or twelve years ago, there was an increase in the number of sophisticated exploits being developed. That has actually started tapering off a little, primarily because it’s not necessary anymore. People are out there opening up S3 buckets or leaving databases open to the world, so there is no need to spend time, energy, and resources on really complex exploits when you can just scan and find a way in. An important part of this equation is not only having the security professionals understand what scale looks like internally, but also training the engineering organization on what’s important about security, how they need to deploy, and how they need to think about people trying to get in. When you can teach them as they go through this process, everyone’s going to get better, and everyone’s going to get faster and more innovative.

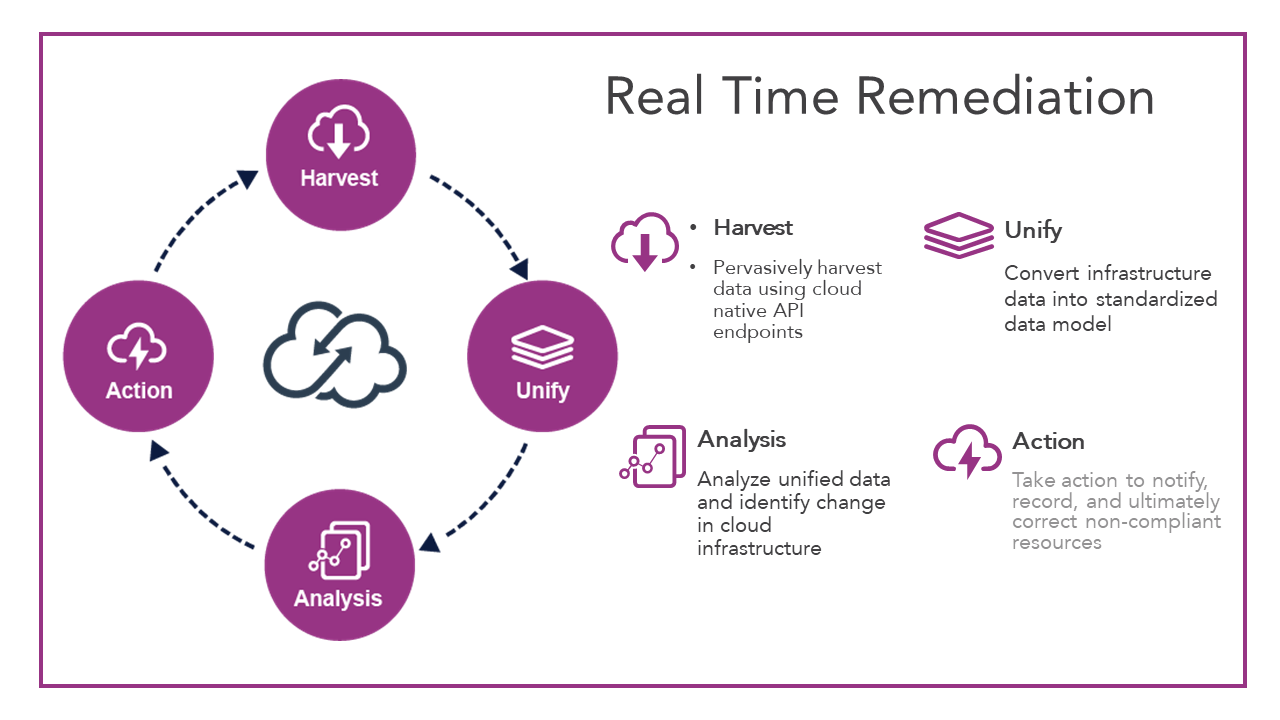

What would a typical service level agreement (SLA) be for the time between an event in a data center and your response? How much data could be lost with that cloud storage open to the world in the meantime? From a remediation standpoint, the response needs to be near real time. As you go around the cycle, it really starts at a harvesting point: using all the access points, the APIs across all those resources, and harvesting the data back in real time, not only upon creation but also upon change. That day-two drift is something to think about too. Things might have been great as you deployed out of the CI/CD toolchain, but what happened after that point with that engineer? I don’t believe people intentionally create most configuration mistakes. It’s the middle of the night, day-two Ops, something is wrong, and someone says, “I’ll change that back as soon as it’s resolved.” Then it doesn’t get flipped back.
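Day-two drift can be pictured as a comparison between the configuration that left the CI/CD toolchain and the configuration harvested later. The following is a minimal Python sketch under that assumption. The `detect_drift` helper and the setting names (`public_access`, `versioning`) are hypothetical and do not come from the presentation or from any specific tool.

```python
from typing import Any


def detect_drift(baseline: dict[str, Any], current: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {setting: (deployed_value, current_value)} for each setting that differs.

    A setting that is missing on one side is reported with None on that side.
    """
    drift = {}
    for key in sorted(baseline.keys() | current.keys()):
        before, after = baseline.get(key), current.get(key)
        if before != after:
            drift[key] = (before, after)
    return drift


# Hypothetical storage bucket settings as deployed by the pipeline...
deployed = {"public_access": False, "versioning": True}
# ...and as harvested after a late-night troubleshooting change.
harvested = {"public_access": True, "versioning": True}

print(detect_drift(deployed, harvested))
```

Running the comparison on every change event, rather than only at deployment, is what would surface the setting that never got flipped back.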

So, you first have to harvest that data back in. Then you want to unify it so that it’s consistent across all of your individual accounts and all of your virtual private clouds (VPCs). All those resources are then normalized into a single data plane. Then you want to drive analysis against it. Establish compliance and security policies based on what being compliant means for your organization. Analyze those resources in real time and then take action. What do I want to happen when this occurs? It’s that if-then scenario. If Port 22 is open to the world, what do I want to do? Who do I want to wake up? What immediate action do I want to take, not only to protect the company, but from an investigative, forensics, and educational perspective as well? Was it the team who inadvertently did it to resolve an issue? Or were we actually breached? So all that data is captured and sent off for analytics.
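The harvest, unify, analyze, and act steps above can be sketched in a few lines of Python. This is an illustration only: the `Resource` and `FirewallRule` shapes, the action names, and the sample inventory are all assumptions made for the example, not the data model of any real product. The check covers only an exact IPv4 `0.0.0.0/0` rule on port 22.

```python
from dataclasses import dataclass, field


@dataclass
class FirewallRule:
    port: int
    source_cidr: str


@dataclass
class Resource:
    """One resource, normalized into the same shape whatever the provider."""
    provider: str  # e.g. "gcp", "aws", "azure"
    account: str
    name: str
    rules: list[FirewallRule] = field(default_factory=list)


def ssh_open_to_world(resource: Resource) -> bool:
    """Policy: is port 22 reachable from any IPv4 address?"""
    return any(r.port == 22 and r.source_cidr == "0.0.0.0/0" for r in resource.rules)


def evaluate(resources: list[Resource]) -> list[tuple[str, str]]:
    """If a resource violates the policy, then emit the actions to take for it."""
    actions = []
    for res in resources:
        if ssh_open_to_world(res):
            actions.append((res.name, "close_port_22"))         # protect the company
            actions.append((res.name, "notify_owner"))          # who do I wake up?
            actions.append((res.name, "record_for_forensics"))  # mistake or breach?
    return actions


# Hypothetical unified inventory harvested from three providers.
inventory = [
    Resource("gcp", "team-a", "web-1", [FirewallRule(443, "0.0.0.0/0")]),
    Resource("aws", "team-b", "bastion", [FirewallRule(22, "0.0.0.0/0")]),
    Resource("azure", "team-c", "db-1", [FirewallRule(22, "10.0.0.0/8")]),
]

for name, action in evaluate(inventory):
    print(name, action)
```

Because every resource is normalized first, the same policy function applies regardless of which cloud the resource lives in.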

Several years ago, Google announced the multicloud push. This is absolutely the right way to go. Kubernetes is going to be the element that breaks down the barriers and commoditizes infrastructure. It’s going to be really important from the enterprise organization perspective and when you’re looking at the infrastructure layer. You need a unified model because you’re going to have engineers using Azure, engineers using GCP, and engineers using Amazon. You can’t build policies that live only in one of those worlds, because you’re going to forget about them, and they are going to die on the vine or drift off in different directions. As we know in security, it doesn’t matter if you have 95% coverage. That 5% is the one that’s going to get you. So you need to make sure you create a holistic strategy and a holistic policy as you move forward.

So how do you do that?

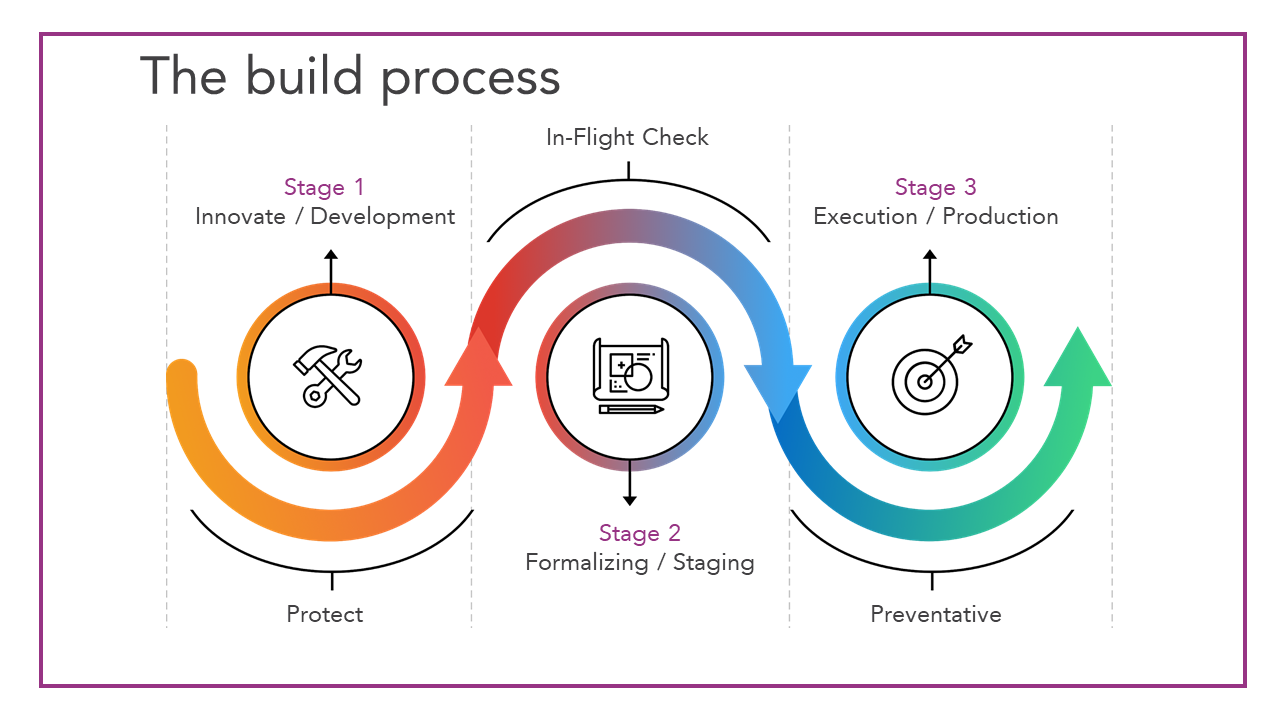

There are lots of different ways to think about dealing with this by using remediation. In a development environment, your remediation might be slightly different than in your production environment. Say you want to do some latency testing in development. When I was working for a big bank, we were not allowed to have servers outside of the United States, but you still want to do that latency testing. So in the development environment, you can spin up an instance in Asia Pacific for the next two hours. Two hours later, the system is automatically going to come back, clean it up, and make sure everything is OK. But when you move that same application to staging, you may not have the ability to do that. You might leverage faster remediation: a server comes up in Asia Pacific, and it’s killed instantly.

You still want to allow engineers to try new services and do more things. You don’t want to lock them down using preventative controls, because you need them to go in there and try new things. You need them to innovate. If you block them at the top layer, they’re just going to go around you. They’ll go create an account and try it themselves. That’s the worst place you can be in. Being compromised is bad. Being compromised and not knowing it is way worse. Embrace this. Help them innovate. Help them learn. Go through that process with them and leverage remediation in real time to provide flexibility in how they do that.

Then, when you get to production, this is where you may want to leverage some preventative controls. The cloud providers today offer different ways to apply preventative controls and lock down certain services from being used. As you take your engineers through this journey, you want them to understand at each stage that it’s a little more stringent, a little tighter, and a little harder to do what they want to do if it doesn’t fit inside the parameters of what we’ve approved. That way, when they get to production and it just doesn’t work, they are not surprised, because you’ve been teaching them the whole way through the journey. What’s more important is that it’s not just about enforcing a policy and then running away; it’s about engaging them. It’s about bringing them into the conversation and asking: What is it you are trying to accomplish? What are you trying to do? Let me help you find a secure way of doing that. Help them innovate and help them along that journey.
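The staged approach described here (a grace period in development, instant cleanup in staging, prevention in production) could be expressed as a small decision function. The Python below is a sketch under stated assumptions: the `decide` function, the verdict strings, the `us-` region prefix test, and the sample region names are hypothetical, and only the two-hour window comes from the example in the talk.

```python
from datetime import timedelta

ALLOWED_REGION_PREFIX = "us-"          # assumption: only US regions are approved
DEV_GRACE_PERIOD = timedelta(hours=2)  # the two-hour window from the example


def decide(environment: str, region: str, age: timedelta) -> str:
    """Return what to do with an instance given its stage, region, and age."""
    if region.startswith(ALLOWED_REGION_PREFIX):
        return "allow"
    if environment == "development":
        # Wide guardrails: let the experiment run, then clean it up.
        return "allow" if age < DEV_GRACE_PERIOD else "terminate"
    if environment == "staging":
        # Tighter: remediate as soon as the instance is seen.
        return "terminate"
    # Production (and anything unrecognized): block the request up front.
    return "deny"


print(decide("development", "asia-east1", timedelta(minutes=30)))
print(decide("development", "asia-east1", timedelta(hours=3)))
print(decide("staging", "asia-east1", timedelta(seconds=5)))
print(decide("production", "asia-east1", timedelta(0)))
```

Keeping the three stages in one function makes the narrowing visible: the same out-of-policy request is tolerated briefly, then removed, then refused.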

Think about it as a funnel of restriction. You’re providing guardrails that are much wider in the early stage to encourage innovation, but by the time you are at stage three, it’s least privilege. In many cases, it’s going to be machine-only privileges that are enabled in production to run those services.

Layers of Security Mindset for Self-Service Adoption

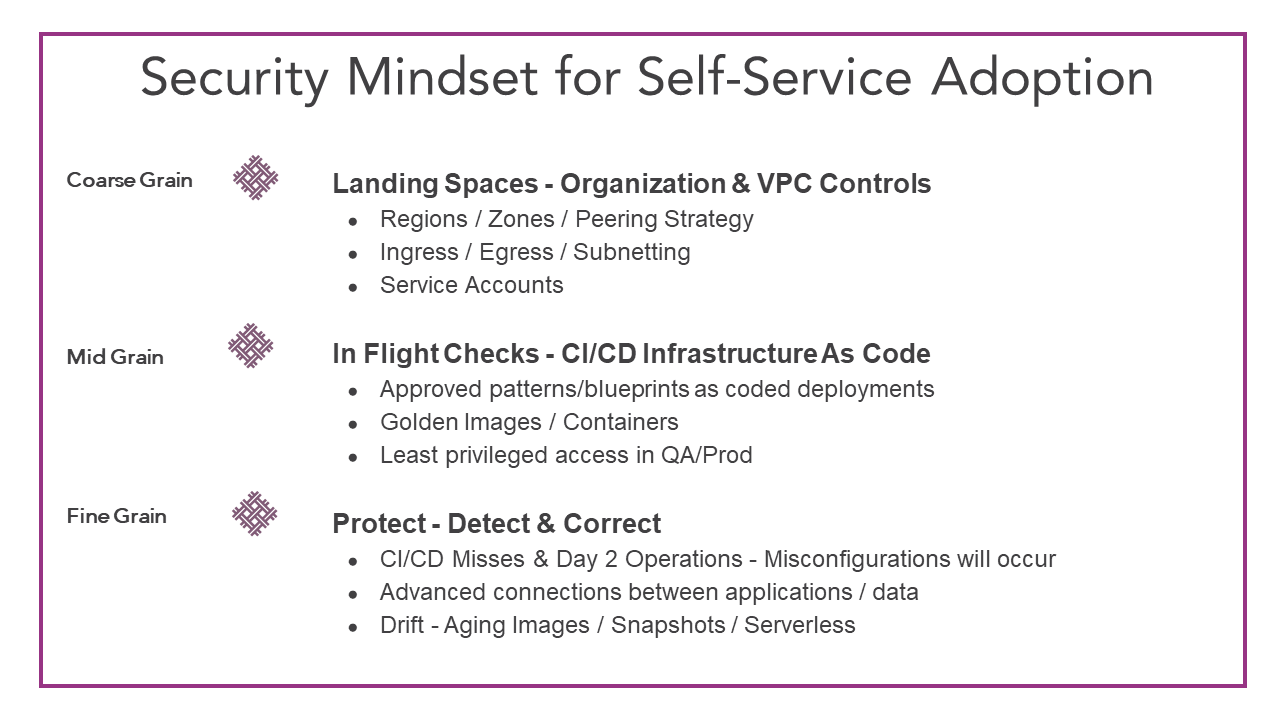

You can think about it as super fine grain up to coarse grain.

Protect mode is where you leverage real-time remediation to clean things up. This could mean cleaning up security groups, identifying databases that have not been accessed in a long time, or finding something that is down. By cleaning up these elements, you are protecting your environment on a regular basis.

Inflight checks are your ability to validate the things you use to deploy and provision into your environment, such as Terraform, Helm charts, or CloudFormation templates. During infrastructure and resource deployment, you will want to validate security. There are tools available to assist you with this real-time security validation. As you go through the CI/CD process, tools can check over the system and raise questions like “I’m about to build these ten resources and this is what they look like. Is this OK?” The CI/CD process then either passes the build with a warning (“Yes, you are allowed to do this in development, but we are warning you that this is a problem”) or actually fails the build. You will want to integrate with the pipeline builds to bring security into the engineers’ world through the inflight checks, not the other way around. Inflight checks are really important.
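As a rough illustration of the pass, warn, or fail decision, here is a minimal Python sketch of an inflight check. The plan structure is a made-up list of dictionaries, not the actual output format of Terraform, Helm, or CloudFormation, and the `check_plan` function and its single rule (no publicly readable storage buckets) are assumptions for the example.

```python
def check_plan(planned: list[dict], environment: str) -> tuple[str, list[str]]:
    """Inspect resources that are about to be built and return (verdict, findings)."""
    findings = [
        f"{res['name']}: storage bucket is publicly readable"
        for res in planned
        if res.get("type") == "storage_bucket" and res.get("public_read", False)
    ]
    if not findings:
        return "pass", findings
    # Development builds continue with a warning; every other stage fails.
    verdict = "warn" if environment == "development" else "fail"
    return verdict, findings


# Hypothetical plan: the resources a pipeline run is about to create.
plan = [
    {"type": "storage_bucket", "name": "logs", "public_read": False},
    {"type": "storage_bucket", "name": "assets", "public_read": True},
    {"type": "vm", "name": "web-1"},
]

for env in ("development", "production"):
    verdict, findings = check_plan(plan, env)
    print(env, verdict, findings)
```

In a real pipeline, a `fail` verdict would typically translate into a nonzero exit code so the build stops, while a `warn` would be printed in the build log where the engineer already looks.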

Finally, think about provisioning accounts in an automated fashion. When you do that for projects or teams, you place controls at provisioning time. This might be a mixture of remediation and preventative measures. You could force deployment through a CI/CD pipeline where it gets validated as it deploys. This tightens security as you move into production and preventative accounts.

The layers of security work in combination. The coarse grain controls, the big mindsets, state the things that are never to be violated. The mid grain is where you may put a warning in the Dev cycle. Don’t immediately shut it down, because you are facing that cultural shift. You’ll want to educate engineering as to why we are going in that direction. The fine grain controls protect not only upon launch, but also against the drift that can occur from day 2 through day 30. These layers of security give us, the leaders, the ability to become more the department of “Yes”, driving innovation for the company, rather than the department of “No”.

The Importance of Having a CloudOps Team

When filtering out the noise, we can’t rely on traditional perimeter security controls and solely provide notification. It’s great to know that there is a theft happening in “aisle 5”, but have we filtered out the noise enough to know exactly where the attack is happening, what is happening, and how to resolve it, and can we take action to remediate it at cloud speed? The companies that have the most success are the ones that are able to get moving the quickest and have an established CloudOps team.

When we talk about security, there is this desire to think about traditional infrastructure security: analyzing network traffic, identifying the external threats, and coming up with preventative measures to react to those threats. However, the security problem that we are facing right now is different from what we have seen before. Having previously done professional exploit development on the offensive side makes you think about things differently, and it establishes new ways of thinking for your preventative processes. On the offensive side, you are thinking about how to get into the black box. On the security side, when you’re defending against that, you’re looking at traffic to try to figure out what people are throwing at you and what they know about you that you don’t know. When you are dealing with the CloudOps side of things and helping the engineering teams grow, it’s much more about understanding what they are doing, what their needs are, and making sure that they don’t make mistakes. It’s an internal threat, and that’s different, because you are on the same side they are on. You’re not fighting with one another. You have to find a way to embrace that.

What we’ve found works is establishing a cloud center of excellence. Having a CloudOps team that is focused on security from a cloud perspective, and on what that means to the internal organization, means you get a lot more innovation quickly. Having that team is an accelerant, not only from an adoption perspective, but from an educational and cultural perspective across the entire organization as people begin to transition out of that data center mindset. Most large organizations are always going to be hybrid. They are going to have their data center with their large ERP systems and other systems that will remain on-prem, but to manage that mindset across the board, it helps to have that CloudOps team.

You need to find a strategy for the organization. This takes us back to the beginning, when we started talking about this not being just a technology problem. This is how businesses are going to transform. The introduction of the cloud doesn’t just change how you deploy applications. It changes what applications you build, what you take to market, and what products you stop developing, because you’re able to do it faster. It’s a huge business transformation. So as you go through this process, you need to decide how security, IT, and CloudOps are going to address this. It’s important to think about this as a holistic strategy. In conclusion, we talked about those layers, all the way from development to production. Take into consideration how you engage and educate your engineering staff as your company adopts the cloud.